What is in PIPS?

Examples

PIPS was initially designed as an automatic parallelizer for scientific programs. It takes Fortran 77 code as input and emphasizes interprocedural techniques for program analysis. The analyses specific to PIPS are the interprocedural computation of preconditions and of array regions. PIPS automatically computes affine preconditions for integer scalar variables, which are much more powerful than standard constant propagation or forward substitution, as shown below:

          I = 1
          N = 10
          J = 3
    C P(I,J,N) {I==1, J==3, N==10}
          DO WHILE (I.LT.N)
    C P(I,J,N) {N==10, I<=9, 5<=2I+J, J<=3}
             PRINT *, I
             IF (T(I).GT.0.) THEN
                J = J-2
             ENDIF
             I = I+1
          ENDDO
    C P(I,J,N) {N==10, I==10, 5<=2I+J, J<=3}
          PRINT *, I, J
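The preconditions annotated in the listing above can be checked by brute force. The following Python sketch is only an illustration, not part of PIPS: it replays the loop for every possible sign pattern of T(1) to T(9) and asserts that the two affine preconditions hold at the loop entry and at the loop exit. The function names are hypothetical, and the array T is modeled as a tuple of booleans standing for the outcome of the test `T(I).GT.0.`.

```python
from itertools import product


def holds_in_loop(i, j, n):
    # C P(I,J,N) {N==10, I<=9, 5<=2I+J, J<=3}
    return n == 10 and i <= 9 and 5 <= 2 * i + j and j <= 3


def holds_at_exit(i, j, n):
    # C P(I,J,N) {N==10, I==10, 5<=2I+J, J<=3}
    return n == 10 and i == 10 and 5 <= 2 * i + j and j <= 3


def check_all_executions():
    """Replay the loop for all 2**9 sign patterns of T and collect final (I, J)."""
    finals = set()
    for positive in product([False, True], repeat=9):
        i, n, j = 1, 10, 3
        while i < n:                      # DO WHILE (I.LT.N)
            assert holds_in_loop(i, j, n)
            if positive[i - 1]:           # models IF (T(I).GT.0.)
                j -= 2
            i += 1
        assert holds_at_exit(i, j, n)
        finals.add((i, j))
    return finals
```

The constraint 5<=2I+J is tight: when every test succeeds, J ends at -15 and 2I+J equals exactly 5. Constant propagation alone could not bound J at all, since its value depends on the contents of T.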

The results of these analyses are used for array and partial-array interprocedural privatization as well as for interprocedural parallelization. Here, for instance, array TI is automatically privatized even though it is initialized through the call to PVNMUT:

    !$OMP PARALLEL DO PRIVATE(J)
          DO K = K1, K2
    !$OMP PARALLEL DO PRIVATE(TI(1:3))
             DO J = J1, JA
                CALL PVNMUT(TI)
                T(J,K,NN) = S*TI(1)
                T(J,K,NN+1) = S*TI(2)
                T(J,K,NN+2) = S*TI(3)
             ENDDO
          ENDDO

As mentioned above, PIPS can also be used as a reverse engineering tool. Region analyses provide useful summaries of procedure effects, while precondition-based partial evaluation and dead-code elimination reduce code size. Cloning has also been used successfully to split a routine implementing several functionalities into a set of routines, each implementing exactly one functionality. Automatic cleaning of declarations is useful when COMMON blocks are over-declared through include statements.

Analyses and Transformations

All analyses and transformations are listed in the documentation of the PIPS API and of the PIPS interfaces. Static analyses compute call graphs, memory effects, use-def chains, dependence graphs, interprocedural checks, transformers, preconditions, continuation conditions, complexity estimates, reduction detection, array regions (read, write, in and out, may or exact), aliases and complementary sections. The results of analyses can be displayed with the source code, with the call graph or with an elapsed interprocedural control flow graph, as text or as graphs. The dependence graphs can also be displayed either as text or as graphs.

Several parallelization algorithms are available, including Allen & Kennedy, as well as automatic code distribution, including a prototype HPF compiler, hpfc. Different views of the parallel outputs are available: HPF, OpenMP, Fortran 90 and Cray Fortran. Furthermore, the polyhedral method of Prof. Feautrier is also implemented.

Program transformations include loop distribution, scalar and array privatization, atomizers (reduction of a statement to a three-address form), loop unrolling (partial and full), strip-mining, loop interchange, partial evaluation, dead-code elimination, use-def elimination, control restructuring, loop normalization, declaration cleaning, cloning, forward substitution and expression optimizations.
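As an illustration of one transformation from this list, strip-mining splits a single loop into an outer loop over strips and an inner loop within each strip, without changing the order in which iterations execute. The Python sketch below is a hand-written model of that idea, not PIPS output; the function names and the `strip` parameter are hypothetical.

```python
def original_loop(n):
    """Iteration order of a simple loop over 0 .. n-1."""
    return [i for i in range(n)]


def strip_mined_loop(n, strip):
    """Same iterations, grouped into strips of at most `strip` iterations."""
    order = []
    for start in range(0, n, strip):                   # outer loop over strips
        for i in range(start, min(start + strip, n)):  # inner loop inside one strip
            order.append(i)
    return order
```

Because the iteration order is preserved, strip-mining is always legal on its own; it is typically a preparatory step for other transformations such as loop interchange.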

Workbench

PIPS is based on linear algebra techniques for analyses, e.g. dependence testing, as well as for code generation, e.g. loop interchange or tiling.

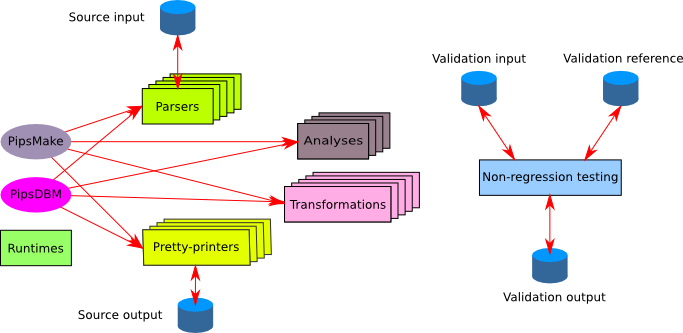

Analyses and transformations are driven by a make system (pipsmake), which enforces consistency across analyses and modules. Results are stored in a database by a resource manager (pipsdbm) for future interprocedural use. The workbench is made of phases that are called on demand to perform the analyses or transformations required by the user. Interprocedural requests are therefore very easy to formulate: only the final result needs to be specified.

PIPS Overall Structure (Note: OpenMP and MPI outputs are now available)
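The demand-driven organization described above can be pictured with a small Python sketch. This is a simplified model under stated assumptions, not the actual pipsmake or pipsdbm code: the class `Workbench`, its methods `add_rule` and `request`, and the resource names are all hypothetical, and the model ignores invalidation of stale results and per-module resources, which a real make system has to handle.

```python
class Workbench:
    """Hypothetical demand-driven phase scheduler with a resource cache."""

    def __init__(self):
        self.rules = {}      # resource name -> (required resources, phase function)
        self.database = {}   # cached results, standing in for the resource database
        self.log = []        # resources actually computed, in order

    def add_rule(self, produces, requires, phase):
        self.rules[produces] = (requires, phase)

    def request(self, resource):
        """Return a resource, running only the phases that are still needed."""
        if resource in self.database:
            return self.database[resource]
        requires, phase = self.rules[resource]
        inputs = [self.request(r) for r in requires]   # recursive demand
        result = phase(*inputs)
        self.database[resource] = result
        self.log.append(resource)
        return result


def demo():
    wb = Workbench()
    wb.add_rule("source", [], lambda: "I = 1")
    wb.add_rule("effects", ["source"], lambda s: f"effects({s})")
    wb.add_rule("preconditions", ["source", "effects"],
                lambda s, e: f"preconditions({s}, {e})")
    wb.request("preconditions")   # only the final result is named
    wb.request("preconditions")   # second request is served from the cache
    return wb.log
```

In this model the user names only the final resource; the prerequisite phases are discovered and run through the rules, and each one runs at most once.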

PIPS is built on top of two other tools. The first one is Newgen, which manages data structures à la IDL. It provides basic manipulation functions for data structures described in a declaration file, and it supports persistent data and type hierarchies. An introductory paper (in English) and a tutorial (in French) are available.

All PIPS data types are based on Newgen. A description of the PIPS internal representation is available in a technical report. The mapping of Fortran onto the PIPS internal representation is described in Technical Report TR E/105, Section 2.

The second tool is the Linear C3 library, which handles vectors, matrices, linear constraints and structures based on these, such as polyhedra. The algorithms used are designed for integer and/or rational coefficients. This library is used extensively for analyses, such as dependence testing and precondition and region computation, for transformations, such as tiling, and for code generation, such as send and receive code in HPF compilation. The Linear C3 library is a joint project with the IRISA and PRISM laboratories, partially funded by CNRS. IRISA contributed an implementation of Chernikova's algorithm and PRISM a C implementation of PIP (Parametric Integer Programming).
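Dependence testing, one of the uses of the library named above, amounts to asking whether a system of linear constraints over the loop indices has an integer solution. The Python sketch below shows the classic textbook GCD test for a single pair of one-dimensional array references. It is a deliberately simpler technique than the polyhedral tests built on Linear C3 and is not taken from PIPS; the function name and its parameters are hypothetical, and loop bounds are ignored.

```python
from math import gcd


def gcd_test_may_depend(a, b, c, d):
    """Can A(a*i + b) and A(c*j + d) touch the same element for integer i, j?

    The equation a*i - c*j = d - b has an integer solution exactly when
    gcd(a, c) divides d - b. Loop bounds are ignored, so True only means
    that a dependence cannot be ruled out by this test.
    """
    g = gcd(a, c)
    if g == 0:                 # both subscripts are constants b and d
        return b == d
    return (d - b) % g == 0
```

For example, a write to A(2*I) and a read of A(2*J+1) can never conflict, because even and odd subscripts never coincide, whereas A(2*I) and A(4*J+2) may.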
