PIPS High-Level Software Interface

Pipsmake configuration

A printable version of this document is available at http://www.cri.ensmp.fr/pips/pipsmake-rc.htdoc/pipsmake-rc.pdf and an HTML version at http://www.cri.ensmp.fr/pips/pipsmake-rc.htdoc.

Chapter 1

Introduction

This paper describes high-level objects and functions that are potentially user-visible in a PIPS [?] interactive environment. It defines the internal software interface between a user interface and program analyses and transformations. This is clearly not a user guide, but it can be used as a reference guide, the best one short of the source code, because PIPS user interfaces are very closely mapped on this document: some of their features are automatically derived from it.

Objects can be viewed and functions activated by one of the existing PIPS user interfaces: tpips, the tty-style interface which is currently recommended; pips [?], the old batch interface, improved by many shell scripts; and wpips and epips, the X Window System interfaces. The epips interface is an extension of wpips which uses Emacs to display more information in a more convenient way. Unfortunately, these window-based interfaces no longer work and have been replaced by gpips. It is also possible to use PIPS through a Python API, pyps.

From a theoretical point of view, the object types and functions available in PIPS define a heterogeneous algebra with constructors (e.g. parser), extractors (e.g. prettyprinter) and operators (e.g. loop unrolling). Very few combinations of functions make sense, but many functions and object types are available. This abundance is confusing for casual and experienced users alike, and it was deemed necessary to assist them by providing default computation rules and automatic consistency management similar to make. The rule interpreter is called pipsmake and is described in [?]. Its key concepts are the phase, which corresponds to a PIPS function made user-visible, for instance a parser; the resources, which correspond to objects used or defined by the phases, for instance a source file or an AST (parsed code); and the virtual rules, which define the set of input resources used by a phase and the set of output resources defined by the phase. Since PIPS is an interprocedural tool, some real input resources are not known until execution. Some variables such as CALLERS or CALLEES can be used in virtual rules. They are expanded at execution to obtain an effective rule with the precise resources needed.

For debugging purposes and for advanced users, the precise choice and tuning of an algorithm can be made using properties. Default properties are installed with PIPS but they can be redefined, partly or entirely, by a properties.rc file located in the current directory. Properties can also be redefined from the user interfaces, for example with the command setproperty when the tpips interface is used.

As far as their static structures are concerned, most object types are described in more detail in PIPS Internal Representation of Fortran and C code. A dynamic view is given here. In which order should functions be applied? Which objects do they produce and, conversely, which function produces a given object? How does PIPS cope with bottom-up and top-down interprocedurality?

Resources produced by several rules, and their associated rules, must be given alias names when they should be explicitly computed or activated by an interactive interface. This is otherwise not relevant. The alias names are used to automatically generate header files and/or test files used by PIPS interfaces.

No more than one resource should be produced per line of rule because different files are automatically extracted from this one. Another caveat is that all resources whose names are suffixed with _file are considered printable or displayable, and the others are considered binary data, even though they may be ASCII strings.
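The suffix convention above amounts to a simple predicate on resource names. The following is a minimal Python illustration, not PIPS code; the function name `is_displayable` is hypothetical, and the resource names are taken from this document.

```python
def is_displayable(resource_name: str) -> bool:
    """Return True when the name follows the `_file` suffix convention."""
    return resource_name.endswith("_file")


# Resource names taken from this document.
for name in ("printed_file", "callgraph_file", "code", "summary_effects"):
    kind = "printable/displayable" if is_displayable(name) else "binary"
    print(f"{name}: {kind}")
```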

This LaTeX file is used by several procedures to derive some pieces of C code and ASCII files. The useful information is located in the PipsMake areas, a very simple literate programming environment. For instance, alias information is used to automatically generate menus for window-based interfaces such as wpips or gpips. Object (a.k.a. resource) types and functions are renamed using the alias declaration. The name space of aliases is global: all aliases must have different names. Function declarations are used to build phases.h, a mapping table between function names and pointers to C functions. Object suffixes are used to derive a header file, resources.h, with all resource names. Parts of this file are also extracted to generate on-line information for wpips and automatic completion for tpips.

The behavior of PIPS can be slightly tuned by using properties. Most properties are linked to a particular phase, for instance to prettyprint, but some are linked to PIPS infrastructure and are presented in Chapter 2.

1.1 Informal syntax

To understand and to be able to write new rules for pipsmake, a few things need to be known.

1.1.1 Example

The rule:

< PROGRAM.entities

< MODULE.code

< CALLEES.summary_effects

Properties are also declared in this file. For instance

1.1.2 Pipsmake variables

The following variables are defined to handle interprocedurality:

- PROGRAM: the whole application currently analyzed;

- MODULE: the current MODULE (a procedure or function);

- ALL: all the MODULEs of the current PROGRAM, functions and compilation units;

- ALLFUNC: all the MODULEs of the current PROGRAM that are functions;

- CALLEES: all the MODULEs called in the given MODULE;

- CALLERS: all the MODULEs that call the given MODULE.

These variables are used in the rule definitions and instantiated before pipsmake infers which resources are pre-requisites for a rule.
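The instantiation step can be pictured with a small Python sketch. It is not the pipsmake implementation: the function `expand_inputs`, the call graph, the module names and the workspace name `demo` are all hypothetical, and ALLFUNC is left out because telling functions apart from compilation units needs information that this toy call graph does not carry. The three inputs are the ones listed in Section 1.1.1.

```python
from typing import Dict, List


def expand_inputs(virtual_inputs: List[str], module: str,
                  call_graph: Dict[str, List[str]],
                  program: str = "PROGRAM") -> List[str]:
    """Expand OWNER.resource items into concrete name.resource items."""
    callees = list(call_graph.get(module, []))
    callers = sorted(m for m, called in call_graph.items() if module in called)
    every = sorted(set(call_graph)
                   | {c for called in call_graph.values() for c in called})
    owners = {
        "PROGRAM": [program],
        "MODULE": [module],
        "CALLEES": callees,
        "CALLERS": callers,
        "ALL": every,
    }
    effective = []
    for item in virtual_inputs:
        owner, resource = item.split(".", 1)
        for name in owners[owner]:
            effective.append(f"{name}.{resource}")
    return effective


# Hypothetical application: MAIN calls INIT and COMPUTE, COMPUTE calls KERNEL.
call_graph = {"MAIN": ["INIT", "COMPUTE"], "COMPUTE": ["KERNEL"],
              "INIT": [], "KERNEL": []}
rule_inputs = ["PROGRAM.entities", "MODULE.code", "CALLEES.summary_effects"]
print(expand_inputs(rule_inputs, "MAIN", call_graph, program="demo"))
```

For a leaf module such as KERNEL, CALLEES expands to nothing, so the effective rule only depends on the program-wide and module-local resources.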

1.2 Properties

This paper also defines and describes global variables used to modify or fine tune PIPS behavior. Since global variables are useful for some purposes, but always dangerous, PIPS programmers are required to avoid them or to declare them explicitly as properties. Properties have an ASCII name and can have boolean, integer or string values.

Casual users should not use them. Some properties are modified for them by the user interface and/or the high-level functions. Some property combinations may be meaningless. More experienced users can set their values, using their names and a user interface.

Experienced users can also modify properties by inserting a file called properties.rc in their local directory. Of course, they cannot declare new properties, since these would not be recognized by the PIPS system. The local property file is read after the default property file, $PIPS_ROOT/etc/properties.rc. Some user-specified property values may be ignored because they are modified by a PIPS function before they have a chance to take effect. Unfortunately, there is no explicit indication of usefulness for the properties in this report.
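The layering described above (defaults first, then local overrides, no new names) can be sketched in Python as follows. This is an illustration under stated assumptions, not the PIPS reader: it assumes one `NAME VALUE` declaration per line, as the declarations appear in this document, ignores any comment syntax, and the function names are hypothetical.

```python
def parse_value(raw: str):
    """Interpret a raw value as a boolean, a quoted string or an integer."""
    if raw in ("TRUE", "FALSE"):
        return raw == "TRUE"
    if len(raw) >= 2 and raw.startswith('"') and raw.endswith('"'):
        return raw[1:-1]
    return int(raw)


def parse_properties(text: str) -> dict:
    """Parse `NAME VALUE` lines; blank lines are skipped."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, _, raw = line.partition(" ")
        props[name] = parse_value(raw.strip())
    return props


def load_properties(default_text: str, local_text: str) -> dict:
    """Read the defaults first, then apply local overrides of known names."""
    props = parse_properties(default_text)
    for name, value in parse_properties(local_text).items():
        if name not in props:
            # New properties cannot be declared in the local file.
            raise KeyError(f"unknown property: {name}")
        props[name] = value
    return props


defaults = 'ONE_TRIP_DO FALSE\nMAXIMUM_USER_ERROR 2\nPIPSDBM_RESOURCES_TO_DELETE "obsolete"'
local = "MAXIMUM_USER_ERROR 5"
print(load_properties(defaults, local))
```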

The default property file can be used to generate a custom version of properties.rc. It is derived automatically from this documentation, Documentation/pipsmake-rc.tex.

PIPS behavior can also be altered by Shell environment variables. Their generic name is XXXX_DEBUG_LEVEL, where XXXX is a library or a phase or an interface name (of course, there are exceptions). Theoretically these environment variables are also declared as properties, but this is generally forgotten by programmers. A debug level of 0 is equivalent to no tracing. The amount of tracing increases with the debug level. The maximum useful value is 9.
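As an illustration of the naming scheme, the following Python sketch looks up a debug level for a given library or phase name. It is not how PIPS reads these variables: the helper `debug_level` is hypothetical, and clamping the value to the 0..9 range and falling back to 0 on a malformed value are assumptions made for the example.

```python
import os


def debug_level(name: str, environ=None) -> int:
    """Return the level found in NAME_DEBUG_LEVEL, or 0 (no tracing)."""
    env = os.environ if environ is None else environ
    raw = env.get(f"{name.upper()}_DEBUG_LEVEL", "0")
    try:
        level = int(raw)
    except ValueError:
        return 0  # assumption: a malformed value disables tracing
    return max(0, min(level, 9))  # assumption: clamp to the useful range


print(debug_level("pipsdbm", {"PIPSDBM_DEBUG_LEVEL": "5"}))
```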

Another Shell environment variable, NEWGEN_MAX_TABULATED_ELEMENTS, is useful to analyze large programs. Its default value is 12,000 but it is not uncommon to have to set it up to 200,000.

Properties are listed below on a source library basis. Properties used in more than one library or used by PIPS infrastructure are presented first. Section 2.3 contains information about properties related to infrastructure, external and user interface libraries. Properties for analyses are grouped in Chapter 6. Properties for program transformations, parallelization and distribution phases are listed in Chapters 7 and 8. User output produced by different kinds of prettyprinters is presented in Chapter 9. Chapter 10 is dedicated to properties of the libraries added by CEA to implement Feautrier’s method.

1.3 Outline

Rule and object declarations are grouped in chapters: input files (Chapter 3), syntax analysis and abstract syntax tree (Chapter 4), analyses (Chapter 6), parallelizations (Chapter 7), program transformations (Chapter 8) and prettyprinters of output files (Chapter 9). Chapter 10 describes several analyses defined by Paul Feautrier. Chapter 11 contains a set of menu declarations for the window-based interfaces.

Virtually every PIPS programmer contributed some lines to this report. Inconsistencies are likely. Please report them to the PIPS team!

Contents

1.1 Informal syntax

1.1.1 Example

1.1.2 Pipsmake variables

1.2 Properties

1.3 Outline

2 Global Options

2.1 Fortran Loops

2.2 Logging

2.3 PIPS Infrastructure

2.3.1 Newgen

2.3.2 C3 Linear Library

2.3.3 PipsMake

2.3.4 PipsDBM

2.3.5 Top Level Control

2.3.6 Tpips Command Line Interface

2.3.7 Warning Control

2.3.8 Option for C Code Generation

3 Input Files

3.1 User File

3.2 Preprocessing and Splitting

3.2.1 Fortran case of preprocessing and splitting

3.2.1.1 Fortran Syntactic Verification

3.2.1.2 Fortran file preprocessing

3.2.1.3 Fortran Split

3.2.1.4 Fortran Syntactic Preprocessing

3.2.2 C Preprocessing and Splitting

3.2.2.1 C Syntactic Verification

3.2.3 Source File Hierarchy

3.3 Source File

3.4 Regeneration of User Source Files

4 Abstract Syntax Tree

4.1 Entities

4.2 Parsed Code and Callees

4.2.1 Fortran

4.2.1.1 Fortran restrictions

4.2.1.2 Some additional remarks

4.2.1.3 Some unfriendly features

4.2.1.4 Declaration of the standard parser

4.2.2 Declaration of HPFC parser

4.2.3 Declaration of the C parsers

4.3 Controlized Code (Hierarchical Control Flow Graph)

5 Pedagogical phases

5.1 Using XML backend

5.2 Prepending a comment

5.3 Prepending a call

5.4 Add a pragma to a module

6 Analyses

6.1 Call Graph

6.2 Memory Effects

6.2.1 Proper Memory Effects

6.2.2 Filtered Proper Memory Effects

6.2.3 Cumulated Memory Effects

6.2.4 Summary Data Flow Information (SDFI)

6.2.5 IN and OUT Effects

6.2.6 Proper and Cumulated References

6.2.7 Effect Properties

6.3 Reductions

6.3.1 Reduction Propagation

6.3.2 Reduction Detection

6.4 Chains (Use-Def Chains)

6.4.1 Menu for Use-Def Chains

6.4.2 Standard Use-Def Chains (a.k.a. Atomic Chains)

6.4.3 READ/WRITE Region-Based Chains

6.4.4 IN/OUT Region-Based Chains

6.4.5 Chain Properties

6.4.5.1 Add use-use Chains

6.4.5.2 Remove Some Chains

6.5 Dependence Graph (DG)

6.5.1 Menu for Dependence Tests

6.5.2 Fast Dependence Test

6.5.3 Full Dependence Test

6.5.4 Semantics Dependence Test

6.5.5 Dependence Test with Convex Array Regions

6.5.6 Dependence Properties (Ricedg)

6.5.6.1 Dependence Test Selection

6.5.6.2 Statistics

6.5.6.3 Algorithmic Dependences

6.5.6.4 Printout

6.5.6.5 Optimization

6.6 Flinter

6.7 Loop statistics

6.8 Semantics Analysis

6.8.1 Transformers

6.8.1.1 Menu for Transformers

6.8.1.2 Fast Intraprocedural Transformers

6.8.1.3 Full Intraprocedural Transformers

6.8.1.4 Fast Interprocedural Transformers

6.8.1.5 Full Interprocedural Transformers

6.8.1.6 Full Interprocedural Transformers

6.8.2 Summary Transformer

6.8.3 Initial Precondition

6.8.4 Intraprocedural Summary Precondition

6.8.5 Preconditions

6.8.5.1 Menu for Preconditions

6.8.5.2 Intra-Procedural Preconditions

6.8.5.3 Fast Inter-Procedural Preconditions

6.8.5.4 Full Inter-Procedural Preconditions

6.8.6 Interprocedural Summary Precondition

6.8.7 Total Preconditions

6.8.7.0.1 Status:

6.8.7.1 Menu for Total Preconditions

6.8.7.2 Intra-Procedural Total Preconditions

6.8.7.3 Inter-Procedural Total Preconditions

6.8.8 Summary Total Precondition

6.8.9 Summary Total Postcondition

6.8.10 Final Postcondition

6.8.11 Semantic Analysis Properties

6.8.11.1 Value types

6.8.11.2 Array declarations and accesses

6.8.11.3 Flow Sensitivity

6.8.11.4 Context for statement and expression transformers

6.8.11.5 Interprocedural Semantics Analysis

6.8.11.6 Fix Point Operators

6.8.11.7 Normalization level

6.8.11.8 Prettyprint

6.8.11.9 Debugging

6.9 Continuation conditions

6.10 Complexities

6.10.1 Menu for Complexities

6.10.2 Uniform Complexities

6.10.3 Summary Complexity

6.10.4 Floating Point Complexities

6.10.5 Complexity properties

6.10.5.1 Debugging

6.10.5.2 Fine Tuning

6.10.5.3 Target Machine and Compiler Selection

6.10.5.4 Evaluation Strategy

6.11 Convex Array Regions

6.11.1 Menu for Convex Array Regions

6.11.2 MAY READ/WRITE Convex Array Regions

6.11.3 MUST READ/WRITE Convex Array Regions

6.11.4 Summary READ/WRITE Convex Array Regions

6.11.5 IN Convex Array Regions

6.11.6 IN Summary Convex Array Regions

6.11.7 OUT Summary Convex Array Regions

6.11.8 OUT Convex Array Regions

6.11.9 Properties for Convex Array Regions

6.12 Alias Analysis

6.12.1 Dynamic Aliases

6.12.2 Intraprocedural Summary Points to Analysis

6.12.3 Points to Analysis

6.12.4 Pointer Values Analyses

6.12.5 Properties for pointer analyses

6.12.6 Menu for Alias Views

6.13 Complementary Sections

6.13.1 READ/WRITE Complementary Sections

6.13.2 Summary READ/WRITE Complementary Sections

7 Parallelization and Distribution

7.1 Code Parallelization

7.1.1 Parallelization properties

7.1.1.1 Properties controlling Rice parallelization

7.1.2 Menu for Parallelization Algorithm Selection

7.1.3 Allen & Kennedy’s Parallelization Algorithm

7.1.4 Def-Use Based Parallelization Algorithm

7.1.5 Parallelization and Vectorization for Cray Multiprocessors

7.1.6 Coarse Grain Parallelization

7.1.7 Global Loop Nest Parallelization

7.1.8 Coerce Parallel Code into Sequential Code

7.1.9 Detect Computation Intensive Loops

7.1.10 Limit Parallelism in Parallel Loop Nests

7.2 SIMDizer for SIMD Multimedia Instruction Set

7.2.1 SIMD properties

7.2.1.1 Auto-Unroll

7.2.1.2 Memory Organisation

7.2.1.3 Pattern file

7.2.2 Scalopes project

7.2.2.1 Bufferization

7.2.2.2 SCMP generation

7.3 Code Distribution

7.3.1 Shared-Memory Emulation

7.3.2 HPF Compiler

7.3.2.1 HPFC Filter

7.3.2.2 HPFC Initialization

7.3.2.3 HPF Directive removal

7.3.2.4 HPFC actual compilation

7.3.2.5 HPFC completion

7.3.2.6 HPFC install

7.3.2.7 HPFC High Performance Fortran Compiler properties

7.3.3 STEP: MPI code generation from OpenMP programs

7.3.3.1 STEP Directives

7.3.3.2 STEP Analysis

7.3.3.3 STEP code generation

7.3.4 PHRASE: high-level language transformation for partial evaluation in reconfigurable logic

7.3.4.1 Phrase Distributor Initialisation

7.3.4.2 Phrase Distributor

7.3.4.3 Phrase Distributor Control Code

7.3.5 Safescale

7.3.5.1 Distribution init

7.3.5.2 Statement Externalization

7.3.6 CoMap: Code Generation for Accelerators with DMA

7.3.6.1 Phrase Remove Dependences

7.3.6.2 Phrase comEngine Distributor

7.3.6.3 PHRASE ComEngine properties

7.3.7 Parallelization for Terapix architecture

7.3.7.1 Isolate Statement

7.3.7.2 Delay Communications

7.3.7.3 Hardware Constraints Solver

7.3.7.4 kernelize

7.3.7.5 Generating communications

7.3.8 Code distribution on GPU

7.3.9 Task generation for SCALOPES project

8 Program Transformations

8.1 Loop Transformations

8.1.1 Introduction

8.1.2 Loop range Normalization

8.1.3 Loop Distribution

8.1.4 Statement Insertion

8.1.5 Loop Expansion

8.1.6 Loop Fusion

8.1.7 Index Set Splitting

8.1.8 Loop Unrolling

8.1.8.1 Regular Loop Unroll

8.1.8.2 Full Loop Unroll

8.1.9 Loop Fusion

8.1.10 Strip-mining

8.1.11 Loop Interchange

8.1.12 Hyperplane Method

8.1.13 Loop Nest Tiling

8.1.14 Symbolic Tiling

8.1.15 Loop Normalize

8.1.16 Guard Elimination and Loop Transformations

8.1.17 Tiling for sequences of loop nests

8.2 Redundancy Elimination

8.2.1 Loop Invariant Code Motion

8.2.2 Partial Redundancy Elimination

8.3 Control-Flow Optimizations

8.3.1 Dead Code Elimination

8.3.1.1 Dead Code Elimination properties

8.3.2 Dead Code Elimination (a.k.a. Use-Def Elimination)

8.3.3 Control Restructurers

8.3.3.1 Unspaghettify

8.3.3.2 Restructure Control

8.3.3.3 For-loop recovering

8.3.3.4 For-loop to do-loop transformation

8.3.3.5 For-loop to while-loop transformation

8.3.3.6 Do-while to while-loop transformation

8.3.3.7 Spaghettify

8.3.3.8 Full Spaghettify

8.3.4 Control Structure Normalisation (STF)

8.3.5 Trivial Test Elimination

8.3.6 Finite State Machine Generation

8.3.6.1 FSM Generation

8.3.6.2 Full FSM Generation

8.3.6.3 FSM Split State

8.3.6.4 FSM Merge States

8.3.6.5 FSM properties

8.3.7 Control Counters

8.4 Expression Transformations

8.4.1 Atomizers

8.4.1.1 General Atomizer

8.4.1.2 Limited Atomizer

8.4.1.3 Atomizer properties

8.4.2 Partial Evaluation

8.4.3 Reduction Detection

8.4.4 Reduction Replacement

8.4.5 Forward Substitution

8.4.6 Expression Substitution

8.4.7 Rename operator

8.4.8 Array to pointer conversion

8.4.9 Expression Optimizations

8.4.9.1 Expression optimization using algebraic properties

8.4.9.2 Common subexpression elimination

8.5 Hardware Accelerator

8.5.1 FREIA Software

8.5.2 FREIA SPoC

8.5.3 FREIA Terapix

8.5.4 FREIA OpenCL

8.6 Function Level Transformations

8.6.1 Inlining

8.6.2 Unfolding

8.6.3 Outlining

8.6.4 Cloning

8.7 Declaration Transformations

8.7.1 Declarations cleaning

8.7.2 Array Resizing

8.7.2.1 Top Down Array Resizing

8.7.2.2 Bottom Up Array Resizing

8.7.2.3 Array Resizing Statistic

8.7.2.4 Array Resizing properties

8.7.3 Scalarization

8.7.4 Induction Variable Substitution

8.7.5 Strength Reduction

8.7.6 Flatten Code

8.7.7 Split Update Operator

8.7.8 Split Initializations (C code)

8.7.9 Set Return Type

8.7.10 Cast at Call Sites

8.8 Array Bound Checking

8.8.1 Elimination of Redundant Tests: Bottom-Up Approach

8.8.2 Insertion of Unavoidable Tests

8.8.3 Interprocedural Array Bound Checking

8.8.4 Array Bound Checking Instrumentation

8.9 Alias Verification

8.9.1 Alias Propagation

8.9.2 Alias Checking

8.10 Used Before Set

8.11 Miscellaneous transformations

8.11.1 Type Checker

8.11.2 Scalar and Array Privatization

8.11.2.1 Scalar Privatization

8.11.2.2 Array Privatization

8.11.3 Scalar and Array Expansion

8.11.3.1 Scalar Expansion

8.11.3.2 Array Expansion

8.11.4 Freeze variables

8.11.5 Manual Editing

8.11.6 Transformation Test

8.12 Extensions Transformations

8.12.1 OpenMP Pragma

9 Output Files (Prettyprinted Files)

9.1 Parsed Printed Files (User View)

9.1.1 Menu for User Views

9.1.2 Standard User View

9.1.3 User View with Transformers

9.1.4 User View with Preconditions

9.1.5 User View with Total Preconditions

9.1.6 User View with Continuation Conditions

9.1.7 User View with Convex Array Regions

9.1.8 User View with Invariant Convex Array Regions

9.1.9 User View with IN Convex Array Regions

9.1.10 User View with OUT Convex Array Regions

9.1.11 User View with Complexities

9.1.12 User View with Proper Effects

9.1.13 User View with Cumulated Effects

9.1.14 User View with IN Effects

9.1.15 User View with OUT Effects

9.2 Printed File (Sequential Views)

9.2.1 Html output

9.2.2 Menu for Sequential Views

9.2.3 Standard Sequential View

9.2.4 Sequential View with Transformers

9.2.5 Sequential View with Initial Preconditions

9.2.6 Sequential View with Complexities

9.2.7 Sequential View with Preconditions

9.2.8 Sequential View with Total Preconditions

9.2.9 Sequential View with Continuation Conditions

9.2.10 Sequential View with Convex Array Regions

9.2.10.1 Sequential View with Plain Pointer Regions

9.2.10.2 Sequential View with Proper Pointer Regions

9.2.10.3 Sequential View with Invariant Pointer Regions

9.2.10.4 Sequential View with Plain Convex Array Regions

9.2.10.5 Sequential View with Proper Convex Array Regions

9.2.10.6 Sequential View with Invariant Convex Array Regions

9.2.10.7 Sequential View with IN Convex Array Regions

9.2.10.8 Sequential View with OUT Convex Array Regions

9.2.10.9 Sequential View with Privatized Convex Array Regions

9.2.11 Sequential View with Complementary Sections

9.2.12 Sequential View with Proper Effects

9.2.13 Sequential View with Cumulated Effects

9.2.14 Sequential View with IN Effects

9.2.15 Sequential View with OUT Effects

9.2.16 Sequential View with Proper Reductions

9.2.17 Sequential View with Cumulated Reductions

9.2.18 Sequential View with Static Control Information

9.2.19 Sequential View with Points-To Information

9.2.20 Sequential View with Simple Pointer Values

9.2.21 Prettyprint properties

9.2.21.1 Language

9.2.21.2 Layout

9.2.21.3 Target Language Selection

9.2.21.3.1 Parallel output style

9.2.21.3.2 Default sequential output style

9.2.21.4 Display Analysis Results

9.2.21.5 Display Internals for Debugging

9.2.21.5.1 Warning:

9.2.21.6 Declarations

9.2.21.7 FORESYS Interface

9.2.21.8 HPFC Prettyprinter

9.2.21.9 Interface to Emacs

9.3 Printed Files with the Intraprocedural Control Graph

9.3.1 Menu for Graph Views

9.3.2 Standard Graph View

9.3.3 Graph View with Transformers

9.3.4 Graph View with Complexities

9.3.5 Graph View with Preconditions

9.3.6 Graph View with Preconditions

9.3.7 Graph View with Regions

9.3.8 Graph View with IN Regions

9.3.9 Graph View with OUT Regions

9.3.10 Graph View with Proper Effects

9.3.11 Graph View with Cumulated Effects

9.3.12 ICFG properties

9.3.13 Graph properties

9.3.13.1 Interface to Graphics Prettyprinters

9.4 Parallel Printed Files

9.4.1 Menu for Parallel View

9.4.2 Fortran 77 Parallel View

9.4.3 HPF Directives Parallel View

9.4.4 OpenMP Directives Parallel View

9.4.5 Fortran 90 Parallel View

9.4.6 Cray Fortran Parallel View

9.5 Call Graph Files

9.5.1 Menu for Call Graphs

9.5.2 Standard Call Graphs

9.5.3 Call Graphs with Complexities

9.5.4 Call Graphs with Preconditions

9.5.5 Call Graphs with Total Preconditions

9.5.6 Call Graphs with Transformers

9.5.7 Call Graphs with Proper Effects

9.5.8 Call Graphs with Cumulated Effects

9.5.9 Call Graphs with Regions

9.5.10 Call Graphs with IN Regions

9.5.11 Call Graphs with OUT Regions

9.6 DrawGraph Interprocedural Control Flow Graph Files (DVICFG)

9.6.1 Menu for DVICFG’s

9.6.2 Minimal ICFG with graphical filtered Proper Effects

9.7 Interprocedural Control Flow Graph Files (ICFG)

9.7.1 Menu for ICFG’s

9.7.2 Minimal ICFG

9.7.3 Minimal ICFG with Complexities

9.7.4 Minimal ICFG with Preconditions

9.7.5 Minimal ICFG with Preconditions

9.7.6 Minimal ICFG with Transformers

9.7.7 Minimal ICFG with Proper Effects

9.7.8 Minimal ICFG with filtered Proper Effects

9.7.9 Minimal ICFG with Cumulated Effects

9.7.10 Minimal ICFG with Regions

9.7.11 Minimal ICFG with IN Regions

9.7.12 Minimal ICFG with OUT Regions

9.7.13 ICFG with Loops

9.7.14 ICFG with Loops and Complexities

9.7.15 ICFG with Loops and Preconditions

9.7.16 ICFG with Loops and Total Preconditions

9.7.17 ICFG with Loops and Transformers

9.7.18 ICFG with Loops and Proper Effects

9.7.19 ICFG with Loops and Cumulated Effects

9.7.20 ICFG with Loops and Regions

9.7.21 ICFG with Loops and IN Regions

9.7.22 ICFG with Loops and OUT Regions

9.7.23 ICFG with Control

9.7.24 ICFG with Control and Complexities

9.7.25 ICFG with Control and Preconditions

9.7.26 ICFG with Control and Total Preconditions

9.7.27 ICFG with Control and Transformers

9.7.28 ICFG with Control and Proper Effects

9.7.29 ICFG with Control and Cumulated Effects

9.7.30 ICFG with Control and Regions

9.7.31 ICFG with Control and IN Regions

9.7.32 ICFG with Control and OUT Regions

9.8 Dependence Graph File

9.8.1 Menu For Dependence Graph Views

9.8.2 Effective Dependence Graph View

9.8.3 Loop-Carried Dependence Graph View

9.8.4 Whole Dependence Graph View

9.8.5 Filtered Dependence Graph View

9.8.6 Filtered Dependence daVinci Graph View

9.8.7 Filtered Dependence Graph View

9.8.8 Chains Graph View

9.8.9 Chains Graph Graphviz Dot View

9.8.10 Dependence Graph Graphviz Dot View

9.8.11 Properties for Dot output

9.9 Fortran to C prettyprinter

9.9.1 Properties for Fortran to C prettyprinter

9.10 Prettyprinters Smalltalk

9.11 Prettyprinter for the Polyhedral Compiler Collection (PoCC)

9.12 Prettyprinter for CLAIRE

10 Feautrier Methods (a.k.a. Polyhedral Method)

10.1 Static Control Detection

10.2 Scheduling

10.3 Code Generation for Affine Schedule

10.4 Prettyprinters for CM Fortran

11 User Interface Menu Layouts

11.1 View menu

11.2 Transformation menu

12 Conclusion

13 Known Problems

Chapter 2

Global Options

Options are called properties in PIPS. Most of them are related to a specific phase, for instance the dependence graph computation. They are declared next to the corresponding phase declaration. But some are related to one library or even to several libraries and they are declared in this chapter.

Skip this chapter on first reading. Also skip this chapter on second reading because you are unlikely to need these properties until you develop in PIPS.

2.1 Fortran Loops

Are DO loop bodies executed at least once (F-66 style), or not (Fortran 77)?

ONE_TRIP_DO FALSE

is useful for use/def and semantics analysis but is not used for region analyses. This dangerous property should be set to FALSE. It is not consistently checked by PIPS phases, because nobody seems to use this obsolete Fortran feature anymore.

2.2 Logging

With

LOG_TIMINGS FALSE

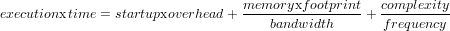

it is possible to display the amount of real, cpu and system times directly spent in each phase as well as the times spent reading/writing data structures from/to PIPS database. The computation of total time used to complete a pipsmake request is broken down into global times, a set of phase times which is the accumulation of the times spent in each phase, and a set of IO times, also accumulated through phases.

Note that the IO times are included in the phase times.

With

LOG_MEMORY_USAGE FALSE

it is possible to log the amount of memory used by each phase and by each request. This is mainly useful to check if a computation can be performed on a given machine. This memory log can also be used to track memory leaks. Valgrind may be more useful to track memory leaks.

2.3 PIPS Infrastructure

PIPS infrastructure is based on a few external libraries, Newgen and Linear, and on three key PIPS libraries:

- pipsdbm which manages resources such as code produced by PIPS and ensures persistence,

- pipsmake which ensures consistency within a workspace with respect to the producer-consumer rules declared in this file,

- and top-level which defines a common API for all PIPS user interfaces, whether human or API.

2.3.1 Newgen

Newgen offers some debugging support to check object consistency (gen_consistent_p and gen_defined_p), and for dynamic type checking. See Newgen documentation[?][?].

2.3.2 C3 Linear Library

This library is external and offers an independent debugging system.

The following properties specify how null, undefined or non-feasible systems are prettyprinted by PIPS:

SYSTEM_NULL "<null system>"

SYSTEM_UNDEFINED "<undefined system>"

SYSTEM_NOT_FEASIBLE "{0==-1}"

2.3.3 PipsMake

With

CHECK_RESOURCE_USAGE FALSE

it is possible to log and report differences between the set of resources actually read and written by the procedures called by pipsmake and the set of resources declared as read or written in the pipsmake.rc file.

ACTIVATE_DEL_DERIVED_RES TRUE

controls the rule activation process that may delete from the database all the derived resources from the newly activated rule to make sure that non-consistent resources cannot be used by accident.

PIPSMAKE_CHECKPOINTS 0

controls how often resources should be saved and freed. 0 means never, and a positive value means every n applications of a rule. This feature was added so that long automatic tpips scripts that may core dump can be restarted later from a state close to the one before the crash. As a side effect, it frees memory and keeps memory consumption as moderate as possible, as opposed to usual tpips runs, which keep all memory allocated. Note that resources should not be saved too often, because saving may take a long time, especially when entities are considered on big workspaces. The frequency may be adapted within a script, from rare at the beginning to more frequent later on.
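The "every n applications of a rule" policy can be made concrete with a short Python sketch. It only models when a checkpoint would be taken; the function name is hypothetical, and treating a negative value like 0 is an assumption, since the text only defines 0 and positive values.

```python
def checkpoint_steps(rule_applications: int, period: int) -> list:
    """Return the 1-based rule applications that are followed by a checkpoint."""
    if period <= 0:  # 0 means never; negative values are treated alike (assumption)
        return []
    return [i for i in range(1, rule_applications + 1) if i % period == 0]


print(checkpoint_steps(10, 0))  # no checkpoint at all
print(checkpoint_steps(10, 3))  # checkpoints after the 3rd, 6th and 9th rules
```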

2.3.4 PipsDBM

The Shell environment variable PIPSDBM_DEBUG_LEVEL can be set to ? to check object consistency when objects are stored in the database, and to ? to check object consistency when they are stored or retrieved (in case an intermediate phase has unintentionally corrupted some data structure).

You can control what is done when a workspace is closed and resources are saved. The

PIPSDBM_RESOURCES_TO_DELETE "obsolete"

property can be set to "obsolete" or to "all".

Note that it is not managed from pipsdbm but from pipsmake which knows what is obsolete or not.

2.3.5 Top Level Control

The top-level library is built on top of the pipsmake and pipsdbm libraries to factorize functions useful to build a PIPS user interface or API.

Property

USER_LOG_P TRUE

controls the logging of the session in the database of the current workspace. This log can be processed by the PIPS utility logfile2tpips to automatically generate a tpips script which can be used to replay the current PIPS session, workspace by workspace, regardless of the PIPS user interface used.

Property

ABORT_ON_USER_ERROR FALSE

specifies how user errors impact execution once the error message is printed on stderr: return and go ahead, usually when PIPS is used interactively, or core dump for debugging purposes and for script executions, especially non-regression tests.

Property

MAXIMUM_USER_ERROR 2

specifies the number of user errors allowed before the program aborts abruptly.

Property

ACTIVE_PHASES "PRINT_SOURCE PRINT_CODE PRINT_PARALLELIZED77_CODE PRINT_CALL_GRAPH PRINT_ICFG TRANSFORMERS_INTER_FULL INTERPROCEDURAL_SUMMARY_PRECONDITION PRECONDITIONS_INTER_FULL ATOMIC_CHAINS RICE_SEMANTICS_DEPENDENCE_GRAPH MAY_REGIONS"

specifies which pipsmake phases should be used when several phases can be used to produce the same resource. This property is used when a workspace is created. A workspace is the database maintained by PIPS to contain all resources defined for a whole application or for the whole set of files used to create it.

Resources that create ambiguities for pipsmake are at least:

- parsed_printed_file

- printed_file

- callgraph_file

- icfg_file

- parsed_code, because several parsers are available

- transformers

- summary_precondition

- preconditions

- regions

- chains

- dg

This list must be updated according to new rules and new resources declared in this file. Note that no default parser is usually specified in this property, because it is selected automatically according to the source file suffixes when possible.
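The role of ACTIVE_PHASES can be illustrated with a small Python sketch that picks, for each ambiguous resource, the candidate phase named in the property. This is not the pipsmake activation code. The phase names come from this document, but the table associating each resource with its candidate phases is an assumption inferred from those names, and `select_phases` is a hypothetical helper.

```python
def select_phases(active_phases: str, producers: dict) -> dict:
    """Map each resource to its active producer, or None if none is listed."""
    active = active_phases.split()
    chosen = {}
    for resource, candidates in producers.items():
        matches = [phase for phase in active if phase in candidates]
        if len(matches) > 1:
            raise ValueError(f"several active phases for {resource}: {matches}")
        chosen[resource] = matches[0] if matches else None
    return chosen


# Assumed resource-to-candidates table, for illustration only.
producers = {
    "transformers": ["TRANSFORMERS_INTRA_FAST", "TRANSFORMERS_INTER_FULL"],
    "dg": ["RICE_FAST_DEPENDENCE_GRAPH", "RICE_SEMANTICS_DEPENDENCE_GRAPH"],
    "chains": ["ATOMIC_CHAINS"],
}
active = "TRANSFORMERS_INTER_FULL ATOMIC_CHAINS RICE_SEMANTICS_DEPENDENCE_GRAPH"
print(select_phases(active, producers))
```

A resource mapped to None has no phase listed for it, which mirrors the case of the parsers, where the choice is left to another mechanism.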

Until October 2009, the active phases were:

PRINT_CALL_GRAPH PRINT_ICFG TRANSFORMERS_INTRA_FAST

INTRAPROCEDURAL_SUMMARY_PRECONDITION

PRECONDITIONS_INTRA ATOMIC_CHAINS

RICE_FAST_DEPENDENCE_GRAPH MAY_REGIONS"

They still are used for the old non-regression tests.

2.3.6 Tpips Command Line Interface

tpips is one of PIPS user interfaces.

TPIPS_IS_A_SHELL FALSE

controls whether tpips should behave as an extended shell and consider any input command that is not a tpips command a Shell command.

This property is automatically set to TRUE when pyps is running.

PYPS FALSE

2.3.7 Warning Control

User warnings may be turned off. Definitely, this is not the default option! Most warnings must be read to understand surprising results. This property is used by library misc.

NO_USER_WARNING FALSE

By default, PIPS reports errors generated by system call stat which is used in library pipsdbm to check the time a resource has been written and hence its temporal consistency.

WARNING_ON_STAT_ERROR TRUE

2.3.8 Option for C Code Generation

The syntactic constraints of C89 on declarations have been eased in C99, where declarations may be interspersed with executable statements. This property is used to request C89-compatible code generation.

C89_CODE_GENERATION FALSE

So the default option is to generate C99 code. This default may be changed because C99 output is likely to make the code generated by PIPS unparsable by PIPS.

There is no guarantee that each code generation phase complies with this property. It is up to each developer to decide whether this global property is used in his/her local phase.

Chapter 3

Input Files

3.1 User File

An input program is a set of user Fortran 77 or C source files and a name, called a workspace. The files are looked for in the current directory, then by using the colon-separated PIPS_SRCPATH variable for other directories where they might be found. The first occurrence of the file name in the ordered directories is chosen, which is consistent with PATH and MANPATH behaviour.
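The lookup order described above can be sketched in Python as follows. This is an illustration only, not the PIPS implementation: the function name find_source_file and the directory and file names of the demonstration are hypothetical.

```python
import os
import tempfile


def find_source_file(name, cwd, srcpath):
    """Return the first path where `name` exists, or None.

    The current directory is tried first, then each directory of the
    colon-separated `srcpath` string, in order.
    """
    candidates = [cwd] + [d for d in srcpath.split(":") if d]
    for directory in candidates:
        path = os.path.join(directory, name)
        if os.path.isfile(path):
            return path
    return None


# Demonstration with throwaway directories: the same file name exists in
# two directories of the search path, and the first one is chosen.
with tempfile.TemporaryDirectory() as root:
    cwd, lib1, lib2 = (os.path.join(root, d) for d in ("cwd", "lib1", "lib2"))
    for d in (cwd, lib1, lib2):
        os.mkdir(d)
    for d in (lib1, lib2):
        open(os.path.join(d, "solver.f"), "w").close()
    found = find_source_file("solver.f", cwd, lib1 + ":" + lib2)
    demo_result = os.path.relpath(found, root)
```

In a real session, the second and third arguments would typically be os.getcwd() and the content of the PIPS_SRCPATH variable.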

The source files are split by PIPS at the program initialization phase to produce one PIPS-private source file for each procedure, subroutine or function, and for each block data. A function like fsplit is used and the new files are stored in the workspace, which simply is a UNIX sub-directory of the current directory. These new files have names suffixed by .f.orig.

Since PIPS performs interprocedural analyses, it expects to find a source code file for each procedure or function called. Missing modules can be replaced by stubs, which can be made more or less precise with respect to their effects on formal parameters and global variables. A stub may be empty. Empty stubs can be automatically generated if the code is properly typed (see Section 3.3).

The user source files should not be edited by the user once PIPS has been started because these edits are not taken into account unless a new workspace is created. But their preprocessed copies, the PIPS source files, can safely be edited while running PIPS. The automatic consistency mechanism makes sure that any information displayed to the user is consistent with the current state of the source files in the workspace. These source files have names terminated by the standard suffix, .f.

New user source files should be automatically and completely re-built when the program is no longer under PIPS control, i.e. when the workspace is closed. An executable application can easily be regenerated after code transformations using the tpips interface and requesting the PRINTED_FILE resources for all modules, including compilation units in C:

display PRINTED_FILE[%ALL]

Note that compilation units can be left out with:

display PRINTED_FILE[%ALLFUNC]

In both cases with C source code, the order of modules may be unsuitable for direct recompilation and compilation units should be included anyway, but this is what is done by explicitly requesting the code regeneration as described in § 3.4.

Note that PIPS expects proper ANSI Fortran 77 code. Its parser was not designed to locate syntax errors. It is highly recommended to check source files with a standard Fortran compiler (see Section 3.2) before submitting them to PIPS.

3.2 Preprocessing and Splitting

3.2.1 Fortran case of preprocessing and splitting

The Fortran files specified as input to PIPS by the user are preprocessed in various ways.

3.2.1.1 Fortran Syntactic Verification

If the PIPS_CHECK_FORTRAN shell environment variable is defined to false or no or 0, the syntax of the source files is not checked. If the PIPS_CHECK_FORTRAN shell environment variable is defined to true or yes or 1, the syntax of each file is checked by compiling it with a Fortran 77 compiler. If the PIPS_CHECK_FORTRAN shell environment variable is not defined, the check is performed according to CHECK_FORTRAN_SYNTAX_BEFORE_RUNNING_PIPS 3.2.1.1.

The Fortran compiler is defined by the PIPS_FLINT environment variable. If it is undefined, the default compiler is f77 -c -ansi.

In case of failure, a warning is displayed. Note that if the program cannot be compiled properly with a Fortran compiler, it is likely that many problems will be encountered within PIPS.

The next property also triggers this preliminary syntactic verification.

CHECK_FORTRAN_SYNTAX_BEFORE_RUNNING_PIPS TRUE
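The interplay between the environment variable and the property can be summarized by the following Python sketch. It is an illustration, not PIPS code: the function name syntax_check_enabled is hypothetical, and the handling of values other than those listed above is an assumption. The same decision rule is described for PIPS_CHECK_C in the C case.

```python
def syntax_check_enabled(env_value, property_value):
    """Decide whether the preliminary syntax check runs.

    env_value: content of the environment variable, or None if undefined.
    property_value: boolean value of the fallback property.
    """
    if env_value is None:
        return property_value
    if env_value in ("false", "no", "0"):
        return False
    if env_value in ("true", "yes", "1"):
        return True
    # Assumption: values not listed in the text fall back to the property.
    return property_value
```

A caller would typically pass os.environ.get("PIPS_CHECK_FORTRAN") as the first argument and the current value of the property as the second one.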

PIPS requires source code for all leaves in its visible call graph. By default, a user error is raised by Function initializer if a user request cannot be satisfied because some source code is missing. It is also possible to generate some synthetic code (also known as stubs) and to update the current module list, but this is not a very satisfying option because all interprocedural analysis results are going to be wrong. The user should retrieve the generated .f files in the workspace, under the Tmp directory, and add some assignments (defs) and uses to mimic the action of the real code, so that the stubs behave adequately for the analyses or transformations to be applied to the whole program. The user-modified synthetic files should then be saved and used to generate a new workspace.

If PREPROCESSOR_MISSING_FILE_HANDLING 3.2.1.1 is set to "query", a script can optionally be set to handle the interactive request using PREPROCESSOR_MISSING_FILE_GENERATOR 3.2.1.1. This script is passed the function name and prints the filename on standard output. When this property is empty, an internal generator is used.

Valid settings: error or generate or query.

PREPROCESSOR_MISSING_FILE_HANDLING "error"

PREPROCESSOR_MISSING_FILE_GENERATOR ""
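A generator script could look like the Python sketch below. Only the calling convention (function name as argument, file name printed on standard output) comes from the description above. Everything else is an assumption made for illustration: the helper name generate_stub, the stub content (an empty Fortran subroutine without arguments, which may not match the call sites) and the use of a temporary directory.

```python
import os
import sys
import tempfile


def generate_stub(function_name, directory):
    """Write an empty Fortran stub for `function_name`; return its path."""
    path = os.path.join(directory, function_name.lower() + ".f")
    with open(path, "w") as stub:
        # Assumed stub content: a subroutine with no argument and no effect.
        stub.write(f"      SUBROUTINE {function_name.upper()}\n")
        stub.write("      END\n")
    return path


if __name__ == "__main__" and len(sys.argv) == 2:
    # The script receives the function name and prints the stub file name.
    print(generate_stub(sys.argv[1], tempfile.mkdtemp()))
```

As explained above, such an empty stub should then be completed by hand with definitions and uses that mimic the real code.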

The generated stub can have various default effects, for instance to prevent over-optimistic parallelization.

STUB_MEMORY_BARRIER FALSE

STUB_IO_BARRIER FALSE

3.2.1.2 Fortran file preprocessing

If the file suffix is .F then the file is preprocessed. By default PIPS uses gfortran -E for Fortran files. This preprocessor can be changed by setting the PIPS_FPP environment variable.

Moreover the default preprocessing options are -P -D__PIPS__ -D__HPFC__ and they can be extended (not replaced...) with the PIPS_FPP_FLAGS environment variable.
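The way the two environment variables combine with the defaults can be sketched in Python as follows. This is an illustration, not the PIPS implementation: the function name, the order of the options on the command line and the position of the file name are assumptions.

```python
import shlex

DEFAULT_FPP = "gfortran -E"
DEFAULT_FPP_FLAGS = "-P -D__PIPS__ -D__HPFC__"


def fortran_preprocessor_command(env, source):
    """Build the argument list used to preprocess one .F file.

    env: mapping holding the environment variables, e.g. os.environ.
    """
    preprocessor = env.get("PIPS_FPP", DEFAULT_FPP)
    extra_flags = env.get("PIPS_FPP_FLAGS", "")
    # Extra flags are appended to the defaults, never substituted for them.
    return (shlex.split(preprocessor) + shlex.split(DEFAULT_FPP_FLAGS)
            + shlex.split(extra_flags) + [source])
```

For instance, fortran_preprocessor_command({"PIPS_FPP_FLAGS": "-DDEBUG"}, "a.F") keeps the three default options and adds -DDEBUG after them.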

3.2.1.3 Fortran Split

The file is then split into one file per module using a PIPS specialized version of fsplit. This preprocessing also handles

- Hollerith constants by converting them to the quoted syntax;

- unnamed modules by adding MAIN000 or PROGRAM MAIN000 or DATA000 or BLOCK DATA DATA000 according to needs.

The output of this phase is a set of .f_initial files in per-module subdirectories. They constitute the resource INITIAL_FILE.

3.2.1.4 Fortran Syntactic Preprocessing

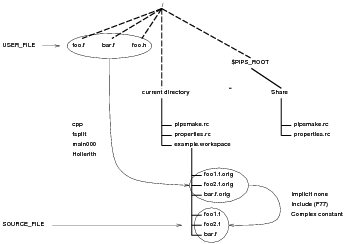

A second step of preprocessing is performed to produce SOURCE_FILE files with standard Fortran suffix .f from the .f_initial files. The two preprocessing steps are shown in Figure 3.1.

Each module source file is then processed by top-level to handle Fortran include and to comment out IMPLICIT NONE which are not managed by PIPS. Also this phase performs some transformations of complex constants to help the PIPS parser. Files referenced in Fortran include statements are looked for from the directory where the Fortran file is. The Shell variable PIPS_CPP_FLAGS is not used to locate these include files.

3.2.2 C Preprocessing and Splitting

The C preprocessor is applied before the splitting. By default PIPS uses cpp -C for C files. This preprocessor can be changed by setting the PIPS_CPP environment variable.

Moreover the -D__PIPS__ -D__HPFC__ -U__GNUC__ preprocessing options are used and can be extended (not replaced) with the PIPS_CPP_FLAGS environment variable.

This PIPS_CPP_FLAGS variable can also be used to locate the include files. Directories to search are specified with the -Ifile option, as usual for the C preprocessor.

3.2.2.1 C Syntactic Verification

If the PIPS_CHECK_C shell environment variable is defined to false or no or 0, the syntax of the source files is not checked by compiling it with a C compiler. If the PIPS_CHECK_C shell environment variable is defined to true or yes or 1, the syntax of the file is checked by compiling it with a C compiler. If the PIPS_CHECK_C shell environment variable is not defined, the check is performed according to CHECK_C_SYNTAX_BEFORE_RUNNING_PIPS 3.2.2.1.

The environment variable PIPS_CC is used to define the C compiler available. If it is undefined, the compiler chosen is gcc -c.

In case of failure, a warning is displayed.

If the environment variable PIPS_CPP_FLAGS is defined, it should contain the options -Wall and -Werror for the check to be effective.

The next property also triggers this preliminary syntactic verification.

CHECK_C_SYNTAX_BEFORE_RUNNING_PIPS TRUE

It is much safer to keep this property set to TRUE when dealing with new source files. PIPS is not designed to process non-standard source code. Bugs in source files are not well explained or localized. They can result in weird behaviors and unexpected core dumps. Before complaining about PIPS, it is highly recommended to set this property to TRUE.

Note: the C and Fortran syntactic verifications could be controlled by a unique property.

3.2.3 Source File Hierarchy

The source files may be placed in different directories and have the same name, which makes resource management more difficult. The default option is to assume that no file name conflicts occur. This is the historical option and it leads to much simpler module names.

PREPROCESSOR_FILE_NAME_CONFLICT_HANDLING FALSE

3.3 Source File

A source_file contains the code of exactly one module. Source files are created from user source files at program initialization by fsplit or a similar function if fsplit is not available (see Section 3.2). A source file may be updated by the user, but not by PIPS. Program transformations are performed on the internal representation (see Chapter 4) and visible in the prettyprinted output (see Chapter 9).

Source code splitting and preprocessing, e.g. cpp, are performed by the function create_workspace() from the top-level library, in collaboration with db_create_workspace() from library pipsdbm which creates the workspace directory. The user source files have names suffixed by .f or .F if cpp must be applied. They are split into original user_files with suffix .f.orig. These so-called original user files are in fact copies stored in the workspace. The syntactic PIPS preprocessor is applied to generate what is known as a source_file by PIPS. This process is fully automated and not visible from PIPS user interfaces. However, the cpp preprocessor actions can be controlled using the Shell environment variable PIPS_CPP_FLAGS.

Function initializer is only called when the source code is not found. If the user code is properly typed, it is possible to force initializer to generate empty stubs by setting properties PREPROCESSOR_MISSING_FILE_HANDLING 3.2.1.1 and, to avoid inconsistency, PARSER_TYPE_CHECK_CALL_SITES 4.2.1.4. But remember that many Fortran codes use subroutines with variable numbers of arguments and with polymorphic types. Fortran varargs mechanism can be achieved by using or not the second argument according to the first one. Polymorphism can be useful to design an IO package or generic array subroutine, e.g. a subroutine setting an array to zero or a subroutine to copy an array into another one.

The current default option is to generate a user error if some source code is missing. This decision was made for two reasons:

- too many warnings about typing are generated as soon as polymorphism is used;

- analysis results and code transformations are potentially wrong because no memory effects are synthesized.

Sometimes, a function happens to be defined (and not only declared) inside a header file with the inline keyword. In that case PIPS can consider it as a regular module or just ignore it, as its presence may be system-dependent. Property IGNORE_FUNCTION_IN_HEADER 3.3 controls this behavior and must be set before workspace creation.

IGNORE_FUNCTION_IN_HEADER TRUE

Modules can be flagged as “stubs”, aka functions provided to PIPS but which shouldn’t be inlined or modified. Property PREPROCESSOR_INITIALIZER_FLAG_AS_STUB 3.3 controls if the initializer should declare new files as stubs.

< PROGRAM.stubs

PREPROCESSOR_INITIALIZER_FLAG_AS_STUB TRUE

> MODULE.initial_file

Note: the resource user_file is generated above mainly so that the resource concept is present here. More thought is needed to have the concept of user files managed by pipsmake.

MUST appear after initializer:

< MODULE.initial_file

< MODULE.user_file

In C, the initializer can generate directly a c_source_file and its compilation unit.

> COMPILATION_UNIT.c_source_file

3.4 Regeneration of User Source Files

The unsplit 3.4 phase regenerates user files from available printed_file. The various modules that were initially stored in a single file are appended together in a file with the same name. Note that only fsplit is reversed, not the preprocessing through cpp. The include file preprocessing is not reversed either.

alias unsplit ’User files Regeneration’

unsplit > PROGRAM.user_file

< ALL.user_file

< ALL.printed_file

Chapter 4

Abstract Syntax Tree

The abstract syntax tree, a.k.a. intermediate representation, a.k.a. internal representation, is presented in [?] and in PIPS Internal Representation of Fortran and C code.

4.1 Entities

Program entities are stored in the PIPS unique symbol table, called entities. Fortran entities, like intrinsics and operators, are created by bootstrap at program initialization. The symbol table is updated with user local and global variables when modules are parsed or linked together. This side effect is not disclosed to pipsmake.

The entity data structure is described in PIPS Internal Representation of Fortran and C code.

The declaration of new intrinsics is not easy because it was assumed that their number was fixed and limited by the Fortran standard. In fact, Fortran extensions define new ones. To add a new intrinsic, C code in bootstrap/bootstrap.c and in effects-generic/intrinsics.c must be added to declare its name, type and Read/Write memory effects.

Information about entities generated by the parsers is printed out depending on property PARSER_DUMP_SYMBOL_TABLE 4.2.1.4, which is set to FALSE by default. Unless you are debugging the parser, do not set this property to TRUE but display the symbol table file instead. See Section 4.2.1.4 for Fortran and Section 4.2.3 for C.

4.2 Parsed Code and Callees

Each module source code is parsed to produce an internal representation called parsed_code and a list of called module names, callees.

4.2.1 Fortran

Source code is assumed to be fully Fortran-77 compliant. On the first encountered error, the parser may be able to emit a useful message or the non-analyzed part of the source code is printed out.

PIPS input language is standard Fortran 77 with a few extensions and some restrictions. The input character set includes the underscore, _, and variable names may have any length, i.e. they are not restricted to 6 characters.

4.2.1.1 Fortran restrictions

- ENTRY statements are not recognized and a user error is generated. Very few cases of this obsolete feature were encountered in the codes initially used to benchmark PIPS. ENTRY statements have to be replaced manually by SUBROUTINE or FUNCTION and appropriate commons. If the parser bumps into a call to an ENTRY point, it may wrongly diagnose a missing source code for this entry, or even generate a useless but pipsmake satisfying stub if the corresponding property has been set (see Section 3.3).

- Multiple returns are not in PIPS Fortran.

- ASSIGN and assigned GOTO are not in PIPS Fortran.

- Computed GOTOs are not in PIPS Fortran. They are automatically replaced by an IF...ELSEIF...ENDIF construct in the parser.

- Functional formal parameters are not accepted. This is deeply exploited in pipsmake.

- Integer PARAMETERs must be initialized with integer constant expressions because conversion functions are not implemented.

- DO loop headers should have no label. Add a CONTINUE just before the loop when it happens. This can be performed automatically if the property PARSER_SIMPLIFY_LABELLED_LOOPS 4.2.1.4 is set to TRUE. This restriction is imposed by the parallelization phases, not by the parser.

- Complex constants, e.g. (0.,1.), are not directly recognized by the parser. They must be replaced by a call to intrinsic CMPLX. The PIPS preprocessing replaces them by a call to COMPLX_.

- Function formulae are not recognized by the parser. An undeclared array and/or an unsupported macro is diagnosed. They may be substituted in an unsafe way by the preprocessor if the property PARSER_EXPAND_STATEMENT_FUNCTIONS 4.2.1.4 is set. If the substitution is considered possibly unsafe, a warning is displayed.

These parser restrictions were based on funding constraints. They are mostly alleviated by the preprocessing phase. PerfectClub and SPEC-CFP95 benchmarks are handled without manual editing, except for ENTRY statements, which the current Fortran standard declares obsolete.

4.2.1.2 Some additional remarks

- The PIPS preprocessing stage included in fsplit() is going to name unnamed modules MAIN000 and unnamed blockdata DATA000 to be consistent with the generated file name.

- Hollerith constants are converted to a more readable quoted form, and then output as such by the prettyprinter.

4.2.1.3 Some unfriendly features

- Source code is read in columns 1-72 only. Lines ending in columns 73 and beyond usually generate incomprehensible errors. A warning is generated for lines ending after column 72.

- Comments are carried by the following statement. Comments carried by RETURN, ENDDO, GOTO or CONTINUE statements are not always preserved because the internal representation transforms these statements or because the parallelization phase regenerates some of them. However, they are more likely to be hidden by the prettyprinter. There is a large range of prettyprinter properties to obtain a less filtered view of the code.

- Formats and character constants are not properly handled. Multi-line formats and constants are not always reprinted in a Fortran correct form.

- Declarations are exploited on-the-fly. Thus type and dimension information must be available before common declaration. If not, wrong common offsets are computed at first and fixed later in Function EndOfProcedure. Also, formal arguments are implicitly declared using the default implicit rule. If it is necessary to declare them, these new declarations should occur before an IMPLICIT declaration. Users are surprised by the type redefinition errors displayed.

4.2.1.4 Declaration of the standard parser

> MODULE.callees

< PROGRAM.entities

< MODULE.source_file

For parser debugging purposes, it is possible to print a summary of the symbol table, when enabling this property:

PARSER_DUMP_SYMBOL_TABLE FALSE

This should be avoided and the resource symbol_table_file be displayed instead.

The prettyprint of the symbol table for a Fortran module is generated with:

< PROGRAM.entities

< MODULE.parsed_code

Input Format

Some subtle errors occur because the PIPS parser uses a fixed format. Columns 73 to 80 are ignored, but the parser may emit a warning if some characters are encountered in this comment field.

PARSER_WARN_FOR_COLUMNS_73_80 TRUE
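Before submitting a file to PIPS, the lines concerned by this warning can be located with a few lines of Python. This is an independent sketch, not the parser's own check: the function name is hypothetical, tabulations are assumed to be absent, and comment lines are reported like any other line.

```python
def lines_beyond_column_72(source_text):
    """Return (line_number, ignored_text) for lines with text in columns 73+."""
    findings = []
    for number, line in enumerate(source_text.splitlines(), start=1):
        ignored = line[72:]  # columns 73 and beyond (0-based index 72)
        if ignored.strip():
            findings.append((number, ignored))
    return findings


# Second line: 10 characters, then 62 'A' fill columns 11 to 72, and
# "+ B" lands in columns 73 to 75, where it is ignored.
sample = "      X = 1\n" + "      Y = " + "A" * 62 + "+ B\n"
report = lines_beyond_column_72(sample)
```

Here report contains one entry for line 2, whose text "+ B" would be silently dropped from the statement.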

ANSI extension

PIPS has been initially developed to parse correct Fortran compliant programs only. Real applications use lots of ANSI extensions… and they are not always correct! To make sure that PIPS output is correct, the input code should be checked against ANSI extensions using property

CHECK_FORTRAN_SYNTAX_BEFORE_RUNNING_PIPS

(see Section 3.2) and the property below should be set to false.

PARSER_ACCEPT_ANSI_EXTENSIONS TRUE

Currently, this property is not used often enough in the PIPS parser, which lets many mistakes go through... as expected by real users!

Array range extension

PIPS has been developed to parse correct Fortran-77 compliant programs only. Array ranges are used to improve readability. They can be generated by PIPS prettyprinter. They are not parsed as correct input by default.

PARSER_ACCEPT_ARRAY_RANGE_EXTENSION FALSE

Type Checking

Each argument list at calls to a function or a subroutine is compared to the functional type of the callee. Turn this off if you need to support variable numbers of arguments or if you use overloading and do not want to hear about it. For instance, an IO routine can be used to write an array of integers or an array of reals or an array of complex if the length parameter is appropriate.

Since the functional typing is shaky, let’s turn it off by default!

PARSER_TYPE_CHECK_CALL_SITES FALSE

Loop Header with Label

The PIPS implementation of Allen&Kennedy algorithm cannot cope with labeled DO loops because the loop, and hence its label, may be replicated if the loop is distributed. The parser can generate an extra CONTINUE statement to carry the label and produce a label-free loop. This is not the standard option because PIPS is designed to output code as close as possible to the user source code.

PARSER_SIMPLIFY_LABELLED_LOOPS FALSE

Most PIPS analyses work better if do loop bounds are affine. It is sometimes possible to improve results for non-affine bounds by assigning the bound to an integer variable and by using this variable as bound. This is implemented for Fortran, but not for C.

PARSER_LINEARIZE_LOOP_BOUNDS FALSE

Entry

The entry construct can be seen as an early attempt at object-oriented programming. The same object can be processed by several functions. The object is declared as a standard subroutine or function and entry points are placed in the executable code. The entry points have different sets of formal parameters, they may share some common pieces of code, they share the declared variables, especially the static ones.

The entry mechanism is dangerous because of the flow of control between entries. It is now obsolete and is not analyzed directly by PIPS. Instead each entry may be converted into a first class function or subroutine and static variables are gathered in a specific common. This is the default option. If the substitution is not acceptable, the property may be turned off and entries result in a parser error.

PARSER_SUBSTITUTE_ENTRIES TRUE

Alternate Return

Alternate returns are put among the obsolete Fortran features by the Fortran 90 standard. It is possible (1) to refuse them (option "NO"), or (2) to ignore them and to replace alternate returns by STOP (option "STOP"), or (3) to substitute them by a semantically equivalent code based on return code values (option "RC" or option "HRC"). Option (2) is useful if the alternate returns are used to propagate error conditions. Option (3) is useful to understand the impact of the alternate returns on the control flow graph and to maintain the code semantics. Option "RC" uses an additional parameter while option "HRC" uses a set of PIPS run-time functions to hide the set and get of the return code, which makes declaration regeneration less useful. By default, the first option is selected and alternate returns are refused.

To produce an executable code, the declarations must be regenerated: see property PRETTYPRINT_ALL_DECLARATIONS 9.2.21.6 in Section 9.2.21.6. This is not necessary with option "HRC". Fewer new declarations are needed if variable PARSER_RETURN_CODE_VARIABLE 4.2.1.4 is implicitly integer because its first letter is in the I-N range.

With option (2), the code can still be executed if alternate returns are used only for errors and if no errors occur. It can also be analyzed to understand what the normal behavior is. For instance, OUT regions are more likely to be exact when exceptions and errors are ignored.

Formal and actual label variables are replaced by string variables to preserve the parameter order and as much source information as possible. See PARSER_FORMAL_LABEL_SUBSTITUTE_PREFIX 4.2.1.4 which is used to generate new variable names.

PARSER_SUBSTITUTE_ALTERNATE_RETURNS "NO"

PARSER_RETURN_CODE_VARIABLE "I_PIPS_RETURN_CODE_"

PARSER_FORMAL_LABEL_SUBSTITUTE_PREFIX "FORMAL_RETURN_LABEL_"

The internal representation can be hidden and the alternate returns can be prettyprinted at the call sites and modules declaration by turning on the following property:

PRETTYPRINT_REGENERATE_ALTERNATE_RETURNS FALSE

Using a mixed C / Fortran RI is troublesome for comments handling: sometimes the comment guard is stored in the comment, sometimes not. Sometimes it is on purpose, sometimes it is not. When the following property is set to true, PIPS does its best to prettyprint comments correctly.

PRETTYPRINT_CHECK_COMMENTS TRUE

If all modules have been processed by PIPS, it is possible not to regenerate alternate returns and to use a code close to the internal representation. If they are regenerated in the call sites and module declaration, they are nevertheless not used by the code generated by PIPS which is consistent with the internal representation.

Here is a possible implementation of the two PIPS run-time subroutines required by the hidden return code ("HRC") option:

subroutine SET_I_PIPS_RETURN_CODE_(irc)

common /PIPS_RETURN_CODE_COMMON/irc_shared

irc_shared = irc

end

subroutine GET_I_PIPS_RETURN_CODE_(irc)

common /PIPS_RETURN_CODE_COMMON/irc_shared

irc = irc_shared

end

Note that the subroutine names depend on the PARSER_RETURN_CODE_VARIABLE 4.2.1.4 property. They are generated by prefixing it with SET_ and GET_. Their implementation is free. The common name used should not conflict with application common names. The ENTRY mechanism is not used because it would be desugared by PIPS anyway.
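The naming convention, together with the remark made earlier about implicitly integer return code variables, can be checked with the following Python sketch. Both function names are hypothetical and the code is not part of PIPS.

```python
def return_code_subroutine_names(variable="I_PIPS_RETURN_CODE_"):
    """Names of the SET and GET run-time subroutines for a given variable."""
    return "SET_" + variable, "GET_" + variable


def is_implicitly_integer(name):
    """True if the default Fortran implicit rule types `name` as INTEGER."""
    # Default rule: names starting with a letter from I to N are integers.
    return bool(name) and name[0].upper() in "IJKLMN"
```

With the default value of the property, the two names are SET_I_PIPS_RETURN_CODE_ and GET_I_PIPS_RETURN_CODE_, and the variable itself is implicitly integer.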

Assigned GO TO

By default, assigned GO TO and ASSIGN statements are not accepted. These constructs are obsolete and will not be part of future Fortran standards.

However, it is possible to replace them automatically in a way similar to computed GO TO. Each ASSIGN statement is replaced by a standard integer assignment. The label is converted to its numerical value. When an assigned GO TO with its optional list of labels is encountered, it is transformed into a sequence of logical IF statements with appropriate tests and GO TO’s. According to Fortran 77 Standard, Section 11.3, Page 11-2, the control variable must be set to one of the labels in the optional list. Hence a STOP statement is generated to interrupt the execution if the control variable matches none of them, but note that compilers such as SUN f77 and g77 do not check this condition at run-time (it is undecidable statically).

PARSER_SUBSTITUTE_ASSIGNED_GOTO FALSE

Assigned GO TO statements without the optional list of labels are not processed. In other words, PIPS makes the optional list mandatory for substitution. It is usually quite easy to add the list of potential targets manually.

Also, ASSIGN statements cannot be used to define a FORMAT label. If the desugaring option is selected, an illegal program is produced by PIPS parser.
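The substitution described in this section can be sketched as a small text generator in Python. This is an illustration of the principle only: the function names are hypothetical, and the exact statements, layout and STOP message emitted by the PIPS parser are not reproduced here.

```python
def desugar_assign(label, variable):
    """ASSIGN <label> TO <variable> becomes a plain integer assignment."""
    return f"      {variable} = {label}"


def desugar_assigned_goto(variable, labels):
    """GO TO <variable>, (l1, l2, ...) becomes logical IFs and a STOP."""
    if not labels:
        # Without the optional list of labels, no substitution is possible.
        raise ValueError("the list of labels is required for the substitution")
    lines = [f"      IF ({variable}.EQ.{label}) GO TO {label}" for label in labels]
    # Reached only if the variable holds none of the listed labels.
    lines.append("      STOP")
    return lines
```

For instance, desugar_assigned_goto("K", [10, 20]) produces two logical IF statements, testing K against 10 and 20, followed by a STOP.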

Statement Function

This property controls the processing of Fortran statement functions by text substitution in the parser. No other processing is available and the parser stops with an error message when a statement function declaration is encountered.

The default used to be not to perform this unchecked replacement, which might change the semantics of the program because type coercion is not enforced and actual parameters are not assigned to intermediate variables. However most statement functions do not require these extra-steps and it is legal to perform the textual substitution. For user convenience, the default option is textual substitution.

Note that the parser does not have enough information to check the validity of the transformation, but a warning is issued if legality is doubtful. If strange results are obtained when executing codes transformed with PIPS, this property should be set to false.

A better method would be to represent them somehow as local functions in the internal representation, but the implications for pipsmake and other issues are clearly not all foreseen… (Fabien Coelho).

PARSER_EXPAND_STATEMENT_FUNCTIONS TRUE

4.2.2 Declaration of HPFC parser

This parser takes a different Fortran file but applies the same processing as the previous parser. The Fortran file is the result of the preprocessing by the hpfc_filter 7.3.2.1 phase of the original file in order to extract the directives and switch them to a Fortran 77 parsable form. As another side-effect, this parser hides some callees from pipsmake. These callees are temporary functions used to encode HPF directives. Their call sites are removed from the code before requesting full analyses from PIPS. This parser is triggered automatically by the hpfc_close 7.3.2.5 phase when requested. It should never be selected or activated by hand.

> MODULE.callees

< PROGRAM.entities

< MODULE.hpfc_filtered_file

4.2.3 Declaration of the C parsers

A C file is seen in PIPS as a compilation unit, which contains all the object declarations that are global to this file, and as many modules as there are function or procedure definitions in this file.

Thus the compilation unit contains the file-global macros, the include statements, the local and global variable definitions, the type definitions, and the function declarations if any found in the C file.

When the PIPS workspace is created by the PIPS preprocessor, each C file is preprocessed, using for instance gcc -E, and broken into a new compilation unit, which contains only the file-global variable declarations, the function declarations and the type definitions, and one C file for each C function defined in the initial C file.

The new compilation units must be parsed before the new files, containing each one exactly one function definition, can be parsed. The new compilation units are named like the initial file names but with a bang extension.

For example, considering a C file foo.c with 2 function definitions:

typedef float data_t;

data_t matrix[N][N];

extern int errno;

int calc(data_t a[N][N]) {

[...]

}

int main(int argc, char *argv[]) {

[..]

}

After preprocessing, it leads to a file foo.cpp_processed.c that is then split into a new foo!.cpp_processed.c compilation unit containing

typedef float data_t;

data_t matrix[N][N];

extern int errno;

int calc(data_t a[N][N]);

int main(int argc, char *argv[]);

and 2 module files containing the definitions of the 2 functions, a calc.c and a main.c.

Note that it is possible to have an empty compilation unit and no module file if the original file does not contain any meaningful C content (for instance an empty file or a file containing only blank characters).
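The file names used in the example above follow a simple pattern, which the Python sketch below reproduces. The function name split_file_names is hypothetical, and the pattern is only derived from the foo.c example, not from the PIPS sources.

```python
import os


def split_file_names(user_file, function_names):
    """Return the preprocessed file, compilation unit and module file names."""
    stem, _ = os.path.splitext(os.path.basename(user_file))
    preprocessed = stem + ".cpp_processed.c"
    # The compilation unit is named like the initial file, with a bang.
    compilation_unit = stem + "!.cpp_processed.c"
    modules = [name + ".c" for name in function_names]
    return preprocessed, compilation_unit, modules
```

Calling split_file_names("foo.c", ["calc", "main"]) gives back the names used in the example: foo.cpp_processed.c, foo!.cpp_processed.c, calc.c and main.c.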

< COMPILATION_UNIT.c_source_file

The resource COMPILATION_UNIT.declarations produced by compilation_unit_parser is a special resource used to force the parsing of the new compilation unit before the parsing of its associated functions. It is in fact a hash table containing the file-global C keywords and typedef names defined in each compilation unit.

In fact, phase compilation_unit_parser also produces parsed_code and callees resources for the compilation unit. This is done to work around the fact that rule c_parser was invoked on compilation units by later phases, in particular for the computation of initial preconditions, breaking the declarations of function prototypes. These two resources are not declared here because pipsmake gets confused between the different rules to compute parsed code: there is no simple way to distinguish between compilation units and modules at some times and to handle them similarly at other times.

> MODULE.callees

< PROGRAM.entities

< MODULE.c_source_file

< COMPILATION_UNIT.declarations

If you want to parse some C code using tpips, it is possible to select the C parser with

PRETTYPRINT_STATEMENT_NUMBER FALSE

PRETTYPRINT_BLOCK_IF_ONLY TRUE

A prettyprint of the symbol table for a C module can be generated with

< PROGRAM.entities

< MODULE.parsed_code

The EXTENDED_VARIABLE_INFORMATION 4.2.3 property can be used to extend the information available for variables. By default, the entity name, the offset and the size are printed. When this property is set, the type and the user name, which may differ from the internal name, are also displayed.

EXTENDED_VARIABLE_INFORMATION FALSE

The C_PARSER_RETURN_SUBSTITUTION 4.2.3 property can be used to properly handle multiple returns within one function. The current default value is false, which best preserves the appearance of the source code but modifies the control flow because the calls to return are assumed to flow in sequence. If the property is set to true, C return statements are replaced, when necessary, either by a simple goto for void functions, or by an assignment of the returned value to a special variable and a goto. A unique return statement is placed at the syntactic end of the function. For functions with no return statement or with a unique return statement placed at the end of their bodies, this property has no effect.

C_PARSER_RETURN_SUBSTITUTION FALSE
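The substitution performed when C_PARSER_RETURN_SUBSTITUTION is set to TRUE can be illustrated on a toy statement list. This is a minimal Python sketch, not the PIPS implementation: the tuple encoding of statements, the variable name result and the label name end are all assumptions made for the illustration.

```python
def contains_return(stmt):
    """True if a toy statement is, or encloses, a return."""
    if stmt[0] == "return":
        return True
    if stmt[0] == "if":                      # ("if", condition, statement)
        return contains_return(stmt[2])
    return False


def rewrite(stmt, is_void, result, label):
    """Replace each return by a goto, preceded by an assignment if needed."""
    if stmt[0] == "return":
        jump = ("goto", label)
        if is_void:
            return jump
        return ("block", [("assign", result, stmt[1]), jump])
    if stmt[0] == "if":
        return ("if", stmt[1], rewrite(stmt[2], is_void, result, label))
    return stmt


def substitute_returns(body, is_void, result="result", label="end"):
    """Give a function body a unique return placed at its syntactic end."""
    early = any(contains_return(s) for s in body[:-1])
    nested_last = bool(body) and body[-1][0] != "return" and contains_return(body[-1])
    if not (early or nested_last):
        return list(body)                    # nothing to do: body left as is
    new_body = [rewrite(s, is_void, result, label) for s in body]
    new_body.append(("label", label))
    new_body.append(("return", None if is_void else result))
    return new_body


body = [("if", "x < 0", ("return", "-1")),
        ("expr", "x = x * 2"),
        ("return", "x")]
rewritten = substitute_returns(body, is_void=False)
```

A body whose only return is its last statement is returned unchanged, which matches the remark that the property has no effect on such functions.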

The C99 for-loop with a declaration such as for(int i = a;...;...) can be represented in the RI with a naive representation such as:

This is done when the C_PARSER_GENERATE_NAIVE_C99_FOR_LOOP_DECLARATION 4.2.3 property is set to TRUE.

C_PARSER_GENERATE_NAIVE_C99_FOR_LOOP_DECLARATION FALSE

Otherwise, other representations can be generated. For example, with some declaration splitting, a more representative version can be generated:

if the C_PARSER_GENERATE_COMPACT_C99_FOR_LOOP_DECLARATION property is set to FALSE.

C_PARSER_GENERATE_COMPACT_C99_FOR_LOOP_DECLARATION FALSE

Otherwise, a more compact representation can be generated (but it is a newer representation that can choke some parts of PIPS...), like:

This representation is not yet implemented.

4.3 Controlized Code (Hierarchical Control Flow Graph)

PIPS analyses and transformations take advantage of a hierarchical control flow graph (HCFG), which preserves structured parts of the code as such, and uses a control flow graph only when no syntactic representation is available (see [?]). The encoding of the relationship between structured and unstructured parts of code is explained elsewhere, mainly in the PIPS Internal Representation of Fortran and C code.

The controlizer 4.3 is the historical controlizer phase that removes GOTO statements in the parsed code and generates a similar representation with small CFGs. It was developed for Fortran 77 code.

The Fortran controlizer phase was too hacked and undocumented to be improved and debugged for C99 code, so a new version has been developed and documented; it is designed to be simpler and easier to understand. For comparison, the Fortran controlizer phase can still be used.

< PROGRAM.entities

< MODULE.parsed_code

For debugging and validation purposes, you can force the use of a specific version of the controlizer by setting at most one of the PIPS_USE_OLD_CONTROLIZER or PIPS_USE_NEW_CONTROLIZER environment variables. This overrides the setting made by activate.

Note that the controlizer choice impacts the HCFG when Fortran entries are used. If you do not know what Fortran entries are, it is deprecated stuff anyway... ☺

The new_controlizer 4.3 removes GOTO statements in the parsed code and generates a similar representation with small CFGs. It is designed to work according to C and C99 standards. Sequences of sequence and variable declarations are handled properly. However, the prettyprinter is tuned for code generated by controlizer 4.3, which does not always minimize the number of goto statements regenerated.

The hierarchical control flow graph built by the controlizer 4.3 is pretty crude. The partial control flow graphs, called unstructured statements, are derived from syntactic constructs. The control scope of an unstructured is the smallest enclosing structured construct, whether a loop, a test or a sequence. Thus some statements, which might be seen as part of structured code, end up as nodes of an unstructured.

Note that sequences of statements are identified as such by controlizer 4.3. Each of them appears as a unique node.

Also, useless CONTINUE statements may be added as provisional landing pads and not removed. The exit node should never have successors but this may happen after some PIPS function calls. The exit node, as well as several other nodes, also may be unreachable. After clean up, there should be no unreachable node or the only unreachable node should be the exit node. Function unspaghettify 8.3.3.1 (see Section 8.3.3.1) is applied by default to clean up and to reduce the control flow graphs after controlizer 4.3.
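The reachability condition stated above can be sketched in Python on a toy control flow graph. The dictionary encoding of the graph and the function names are assumptions made for this illustration; the sketch does not reproduce what unspaghettify actually does.

```python
def reachable_from(entry, successors):
    """Set of nodes reachable from the entry node (iterative traversal)."""
    seen = set()
    stack = [entry]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(successors.get(node, ()))
    return seen


def remove_unreachable(entry, exit_node, successors):
    """Drop unreachable nodes, except the exit node, which is always kept."""
    keep = reachable_from(entry, successors) | {exit_node}
    return {node: [s for s in successors.get(node, ()) if s in keep]
            for node in keep}


# "a" loops forever, so the exit node is unreachable; "orphan" is dead code.
graph = {"entry": ["a"], "a": ["a"], "orphan": ["exit"], "exit": []}
cleaned = remove_unreachable("entry", "exit", graph)
```

After the clean up, the only unreachable node left in this example is the exit node, and it has no successors.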

The GOTO statements are transformed into arcs but also into CONTINUE statements to preserve as many user comments as possible.

The top statement of a module returned by the controlizer 4.3 always used to contain an unstructured instruction with only one node. Several phases in PIPS assumed that this was always the case, although other program transformations may well return any kind of top statement, most likely a block. This is no longer the case: the top statement of a module may contain any kind of instruction.

The C and C99 controlizer is declared here:

< PROGRAM.entities

< MODULE.parsed_code

Control restructuring eliminates empty sequences, except when they are the empty true or false branch of a structured IF. This semantic property of the PIPS Internal Representation of Fortran and C code is enforced by libraries effects, regions, hpfc and effects-generic.

WARN_ABOUT_EMPTY_SEQUENCES FALSE

When this property is unset, unspaghettify 8.3.3.1 is not applied implicitly in the controlizer phase.

UNSPAGHETTIFY_IN_CONTROLIZER TRUE

The next property is used to convert C for loops into C while loops. The purpose is to speed up the re-use of Fortran analyses and transformations for C code. This property is set to false by default and should ultimately disappear. But, for the convenience of new users, it is set to TRUE by activate_language() when the language is C.

FOR_TO_WHILE_LOOP_IN_CONTROLIZER FALSE
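The idea behind the for-to-while conversion can be sketched as a purely textual rewriting in Python. The function for_to_while is hypothetical and works on strings, whereas PIPS works on its internal representation; the sketch also ignores continue statements, which in C jump to the increment of a for loop and would skip it in the naive while form.

```python
def for_to_while(init, cond, inc, body):
    """Render `for (init; cond; inc) { body }` as an equivalent while loop.

    Hypothetical textual sketch: `body` is a list of C statements given as
    strings, assumed to contain no `continue`.
    """
    lines = [f"{init};", f"while ({cond}) {{"]
    lines += [f"    {stmt}" for stmt in body]
    lines += [f"    {inc};", "}"]
    return "\n".join(lines)


converted = for_to_while("i = 0", "i < n", "i++", ["a[i] = 0;"])
```

The initialization is hoisted before the loop and the increment becomes the last statement of the loop body.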

The next property is used to convert C for loops into C do loops when syntactically possible. The conversion is not safe because the effect of the loop body on the loop index is not checked. The purpose is to speed up the re-use of Fortran analyses and transformations for C code. This property is set to false by default and should disappear soon. But, for the convenience of new users, it is set to TRUE by activate_language() when the language is C.

FOR_TO_DO_LOOP_IN_CONTROLIZER FALSE

This can also be explicitly applied by calling the phase described in § 8.3.3.4.

FORMAT Restructuring

To enable deeper code transformations, FORMATs can be gathered at the very beginning of the code or at the very end according to the following options in the unspaghettify or control restructuring phase.

GATHER_FORMATS_AT_BEGINNING FALSE

GATHER_FORMATS_AT_END FALSE

Clean Up Sequences

This property displays statistics about the clean-up of sequences and the removal of useless CONTINUE or empty statements.

CLEAN_UP_SEQUENCES_DISPLAY_STATISTICS FALSE

There is a trade-off between keeping the comments associated with labels and gotos and the cleaning that can be done on the control graph.

By default, do not fuse empty control nodes that have labels or comments:

FUSE_CONTROL_NODES_WITH_COMMENTS_OR_LABEL FALSE

Chapter 5

Pedagogical phases

Although these phases should be spread elsewhere in this manual, we have gathered here some pedagogical phases that are useful for getting started with PIPS.

5.1 Using XML backend

A phase that displays, in debug mode, statements matching an XPath expression on the internal representation:

simple_xpath_test > MODULE.code

< PROGRAM.entities

< MODULE.code
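What matching statements against an XPath expression means can be illustrated with the Python standard library, which supports a subset of XPath. The XML document below is entirely hypothetical: the element and attribute names are invented for the illustration and do not reflect the XML produced by the PIPS backend.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML view of a module; not the actual PIPS XML schema.
DOCUMENT = """
<module name="calc">
  <statement kind="loop" number="1">
    <statement kind="assignment" number="2"/>
  </statement>
  <statement kind="call" number="3"/>
</module>
"""

root = ET.fromstring(DOCUMENT)

# Select every statement, at any depth, whose kind attribute is "loop".
loops = root.findall(".//statement[@kind='loop']")
loop_numbers = [stmt.get("number") for stmt in loops]

# Select all statements, nested or not, in document order.
all_numbers = [stmt.get("number") for stmt in root.iter("statement")]
```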

5.2 Prepending a comment

Prepends a comment to the first statement of a module. Useful to apply post-processing after PIPS.

prepend_comment > MODULE.code

< PROGRAM.entities

< MODULE.code

The comment to add is selected by this property:

PREPEND_COMMENT "/* This comment is added by PREPEND_COMMENT phase */"

5.3 Prepending a call

This phase inserts a call to function MY_TRACK just before the first statement of a module. It is useful as a pedagogical example to explore the internal representation and Newgen. It is not to be used for any practical purpose as it is buggy. Debugging it is a pedagogical exercise.

prepend_call > MODULE.code

> MODULE.callees

< PROGRAM.entities

< MODULE.code

The called function could be defined by this property:

PREPEND_CALL "MY_TRACK"

but it is not.

5.4 Add a pragma to a module

This phase prepends or appends a pragma to a module.

add_pragma > MODULE.code

< PROGRAM.entities

< MODULE.code

The pragma name can be defined by this property:

PRAGMA_NAME "MY_PRAGMA"

The pragma is prepended or appended according to this property:

PRAGMA_PREPEND TRUE

Remove labels that are not useful

< PROGRAM.entities

< MODULE.code

Loop labels can be kept thanks to this property:

REMOVE_USELESS_LABEL_KEEP_LOOP_LABEL FALSE

Chapter 6

Analyses

Analyses encompass the computations of call graphs, the memory effects, reductions, use-def chains, dependence graphs, interprocedural checks (flinter), semantics information (transformers and preconditions), continuations, complexities, convex array regions, dynamic aliases and complementary regions.

6.1 Call Graph

All lists of callees are needed to build the global lists of callers for each module. The callers and callees lists are used by pipsmake to control top-down and bottom-up analyses. The call graph is assumed to be a DAG, i.e. no recursive cycle exists, but it is not necessarily connected.
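The derivation of the callers lists from the callees lists amounts to inverting the call graph. The following Python sketch uses a plain dictionary from module names to callee names; this encoding and the function name are assumptions, not the pipsmake data structures.

```python
def callers_from_callees(callees):
    """Invert a mapping module -> callees into a mapping module -> callers."""
    callers = {module: [] for module in callees}
    for module, called in callees.items():
        for callee in called:
            callers.setdefault(callee, []).append(module)
    return callers


callees = {"main": ["calc", "init"], "calc": ["init"], "init": []}
callers = callers_from_callees(callees)
```

Every callees list is visited before any callers list is complete, which is why all lists of callees are needed first.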

The height of a module can be used to schedule bottom-up analyses. It is zero if the module has no callees. Else, it is the maximal height of the callees plus one.

The depth of a module can be used to schedule top-down analyses. It is zero if the module has no callers. Else, it is the maximal depth of the callers plus one.

> ALL.height

> ALL.depth

< ALL.callees
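The definitions of height and depth given above translate directly into a memoized recursion. This Python sketch assumes that the call graph is a DAG, as stated, and that the callees and callers lists are available as dictionaries; the function name levels and the module names are hypothetical.

```python
def levels(neighbours):
    """Zero for a module without neighbours, else one plus the maximal
    level of its neighbours. Terminates only if the graph is acyclic."""
    memo = {}

    def level(module):
        if module not in memo:
            memo[module] = 1 + max(
                (level(n) for n in neighbours[module]), default=-1)
        return memo[module]

    return {module: level(module) for module in neighbours}


# "util" is neither called nor calling: the graph need not be connected.
callees = {"main": ["calc", "init"], "calc": ["init"], "init": [], "util": []}
callers = {"main": [], "calc": ["main"], "init": ["main", "calc"], "util": []}

height = levels(callees)   # used to schedule bottom-up analyses
depth = levels(callers)    # used to schedule top-down analyses
```

Processing modules by increasing height guarantees that every callee is analysed before its callers; increasing depth gives the symmetric guarantee for top-down analyses.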

The following pass generates a uDrawGraph version of the call graph. It is quite partial since it should rely on a hypothetical resource gathering all callees, direct and indirect.

alias graph_of_calls 'For current module'

alias full_graph_of_calls 'For all modules'

graph_of_calls > MODULE.dvcg_file

< ALL.callees

full_graph_of_calls > PROGRAM.dvcg_file

< ALL.callees

6.2 Memory Effects

The data structures used to represent memory effects and their computation are described in [?]. Another description is available online, in the PIPS Internal Representation of Fortran and C code technical report.

Note that the standard name in the Dragon book is likely to be Gen and Kill sets in the standard data flow analysis framework, but PIPS uses the more general concept of effect developed by P. Jouvelot and D. Gifford [?], and its analyses are mostly based on the abstract syntax tree (AST) rather than the control flow graph (CFG).

6.2.1 Proper Memory Effects